What is the robots.txt?

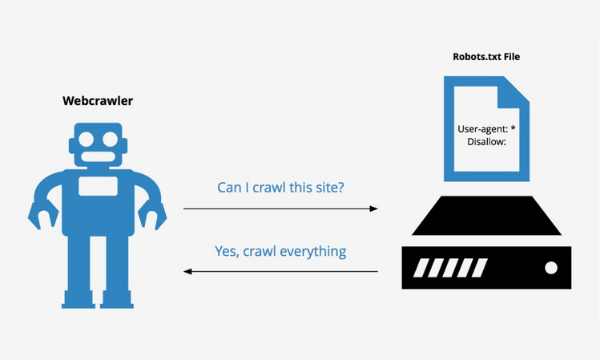

The Robots.txt is a publicly accessible text file that can be found at the root of the webserver. It is an important file because it tells you which parts of a website a search engine crawler, such as Googlebot for Google, is not allowed to visit. The rules that are set can significantly affect the performance of the website. If a website has not placed robots.txt, a search engine will crawl the entire website.

When a search engine crawler visits the website, it first checks for the existence of a Robots.txt file in the root of the domain. This text file is part of the Robots Exclusion Protocol (REP). This protocol was established to regulate how robots are allowed to crawl and index the web. For example, you can use the robots.txt to exclude that a search engine can crawl and index certain subfolders of the CMS. It is good to realize that the guidelines in the robots.txt are strict signals and not obligations for a search engine. Thus, a search engine may choose to ignore portions of the robots.txt.

Table of Content

Robots.txt and SEO

Because of the direct link with the crawlers of search engines, the robots.txt is an important file for SEO specialists. For SEO it is important that the important pages of a website are accessible and can be indexed. In addition, it is ensured that the non-important pages are not paid attention by a crawler. The robots.txt can be crucial in this. A single line in the robots.txt can prevent a large part of the website from being indexed.

User agents and Robots.txt

The robots.txt indicates how search engines should deal with the website. This can be further specified per search engine crawler, also called User Agent. You can set separate rules for each User-Agent. The most well-known User Agents are:

- Google bot (Google)

- Googlebot Image (Google images)

- Bingbot (Microsoft Bing)

- Slurp (Yahoo)

- Baiduspider (Baidu)

- DuckDuckBot (Duckduckgo)

- Applebot (Apple)

- * (all User Agents)

The above are just the most well-known user agents, but there are hundreds of other bots that crawl websites.

Example robots.txt

A robots.txt is built by indicating which guidelines apply per User-Agent. This can be done by addressing all User-Agents with a *, or by naming the User-Agent specifically. For the robots.txt, Googlebot and Bingbot are governed by a more specific rule. An example of a robots.txt is as follows (for WordPress):

User-agent: *

Disallow: /wp-admin/

Allow: /wp-admin/admin-ajax.php

The above example states that all User-Agents (User-agent: *) are not allowed to crawl and index anything in the wp-admin folder (Disallow: /wp-admin/). The more specific line below that (Allow: /wp-admin/admin-ajax.php) works like a filter in this case. This will allow crawlers to access the admin-ajax.php file.

Do you want certain files or images to be skipped? Then you indicate this in a specific line:

User agent: *

Disallow: /private/

Common mistakes with robots.txt

A robots.txt therefore provides guidelines to a crawler. Unfortunately, errors in these files are common, which can have serious consequences for the website and traffic. Below are some of the most common robots.txt errors.

- Private files are indexed by certain search engines anyway . A robots.txt contains guidelines that can be ignored by a bot, especially the less respected bots. It is therefore no guarantee that a folder will not be visited by a bot. If you really want to protect files or folders, other methods such as a login with password are better.

- A disallow rule has been set, but my file/page is still in the search results . Just because a file or folder is excluded via a robots.txt does not mean that a crawler will never find and index it. This is because it is possible to link to these files and folder via other websites. Therefore, using the noindex meta directive is recommended to exclude pages from indexation.

- The entire website will be excluded by entering ‘Disallow: /’ . As a result, no folder or file behind the root may be crawled and indexed. Make sure not to exclude parts of the website that you want to show in the search results.

- Absolute URLs or URLs from other domains are used . A robots.txt only applies to the domain it is placed on and only accepts relative URLs for subfolders or files (except for the sitemap). It is not possible to refer to another site with absolute URLs.

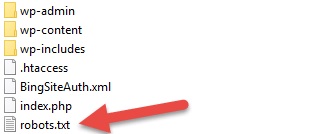

- The robots.txt is located in a subfolder of the domain . The robots.txt should be in the root of the domain.

- A reference to a trailing slash URL if it doesn’t have one.

- Start a Disallow line without a slash.

- Capitalize a URL if it doesn’t have one (or vice versa).

- Place the sitemap reference through a relative URL. The reference to a sitemap must be through an absolute URL.

Robots.txt: Best Practices

For the robots.txt there are standard rules that it must comply with. Still, there are some best practices when creating a robots.txt:

- Exclude certain malware bots via a separate rule:

User-agent: NameOfBot

Disallow: /

- The robots.txt is publicly accessible so do not write down names of private folders.

- It is recommended to place a reference to the sitemap in the robots.txt. This way a crawler is always aware of the location of the sitemap.

Sitemap: http://www.example.com/sitemap.xml

- The order of the rules matters. By default, the first line is always leading. Winning specific rules apply to Googlebot and Bingbot.

Create robots.txt

Creating a robots.txt sounds complicated, but luckily it’s quite simple. There are a number of ways to create a robots.txt.

Generator

It is possible to create and download robots.txt files via so-called Robots.txt generators. Examples of such generators are:

- Ryte

- SEOBook

- SEOptimer

Yoast / Rank Math

The popular WordPress plugins Yoast and Rank Math make it easy to create a robots.txt for WordPress websites. From the tool you can create a robots.txt and adjust it if necessary.

Manually

Of course you can also create a robots.txt manually. This will create a new file on your PC using a program like Notepad. Here you process the desired lines and save them as robots.txt. Make sure to save the file in a .txt format. Finally, you place this file in the root of your website via an FTP client.

Robots.txt tester

If you create a robots.txt manually or via a generator, it is wise to validate it via a robots.txt tester. Google has made its own robots.txt testing tool available for this. It only works for proportions validated by Google. Outside of Google, there are also tools available to test the robots.txt. For example, consider the online tool of TechnicalSEO.